Use a Nexmosphere hand gesture sensor to control content in Blocks

WORK IN PROGRESS: This application note describes an example of how to use the Nexmosphere XV-H40 hand gesture sensor to navigate Blocks content.

This note assumes a controller with a built-in serial-to-USB interface (e.g. the XN-185) connected directly to a USB port on a Pixilab Player, but the same approach also works with a serial controller connected over the network through a serial port server such as a Moxa NPort.

Hardware requirements

- Nexmosphere XN-185 Experience controller. This is the 8-interface controller device, with USB connection.

- Nexmosphere XV-H40 Element. This is the sensor module that handles the image data processing.

- Nexmosphere S-CLA05 camera module

- Computer running Pixilab Player, which lets us hook up the sensors to Blocks.

- USB capture interface (optional), for capturing video from the sensor to use in Blocks content.

Wiring

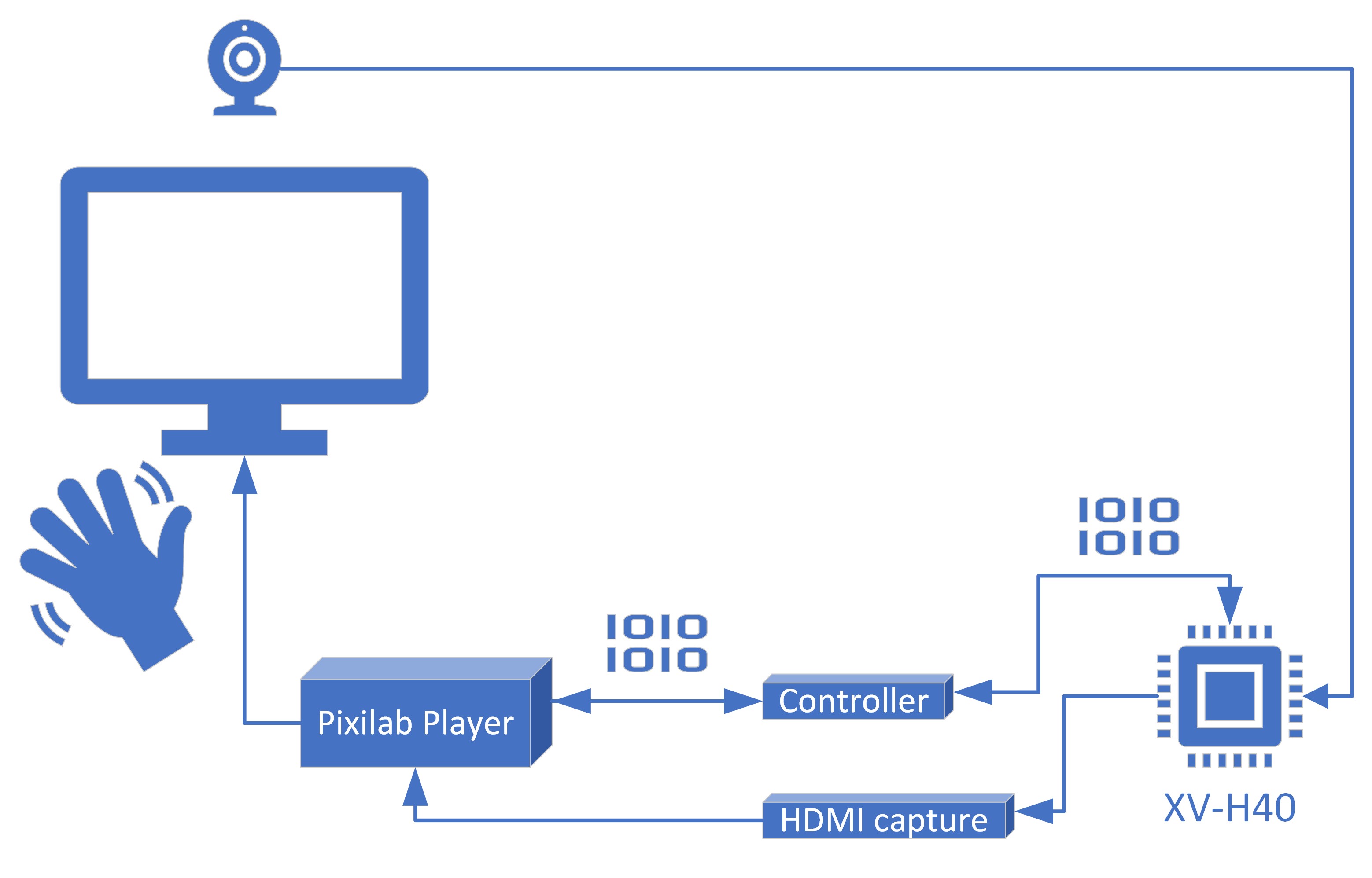

Follow the vendor's manual for the wiring. The diagram above is a schematic overview that includes the optional capture interface.

Sensor

All Nexmosphere sensors are designed with simplicity in mind. This is by far the most advanced sensor at the time of writing, yet it is still easy to get started with. One significant difference between this element and others from Nexmosphere is that some settings related to the sensor's "AI-engine" seem to be stored persistently.

The Driver

The existing Nexmosphere drivers have been updated with extensive support for the XV-H40 hand gesture sensor. Update or install the driver in any existing system to gain support for this device. Most required functions are implemented as callables on the driver. Any other settings can be set by sending a custom command to the element; refer to the vendor's device manual for the available commands.

Activation Zones

Exposes the following callables:

- clearSingleZone, clears a single zone with a specific ID.

- clearAllZones, clears all zones at once.

- setZone, sets up a single zone with size and position parameters.*

- setZoneTriggerGesture, specifies a gesture and an optional direction to act as the trigger when using Activation Zones.

Exposes the following readonly properties:

- inZoneX, a Boolean per zone (1-9). Can be bound to a Classify behavior placed on, e.g., a button, or used as a trigger in tasks. Is true while a hand is in the zone.

- clickInZoneX, a Boolean per zone (1-9). Can be bound to a Press behavior placed on a button, or used as a trigger in tasks. Momentarily becomes true when a hand is in the zone and the selected trigger gesture is detected from the same hand.

(*This information is stored persistently in the XV-H40.)

Gesture detection

Exposes the following readonly property:

- gesture, a string property that exposes both the gesture and the direction in a single value, e.g. THUMB:UP, OPENPALM:CENTER, SWIPE:LEFT. Can be bound to behaviors or used as a trigger for Blocks tasks.
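To illustrate the combined value, here is a minimal TypeScript sketch that splits a gesture string such as SWIPE:LEFT into its gesture and direction parts. The helper names (`parseGesture`, `navigationAction`) and the mapping from gestures to navigation actions are hypothetical and made up for this example; they are not part of the driver.

```typescript
// Hypothetical helpers for illustration only; not part of the Nexmosphere driver.
interface ParsedGesture {
  gesture: string;   // e.g. "SWIPE"
  direction: string; // e.g. "LEFT", or "" if the value has no direction part
}

// Split a value such as "THUMB:UP" at the first colon.
function parseGesture(value: string): ParsedGesture {
  const text = value.trim().toUpperCase();
  const sep = text.indexOf(":");
  if (sep < 0) {
    return { gesture: text, direction: "" };
  }
  return { gesture: text.slice(0, sep), direction: text.slice(sep + 1) };
}

// Example mapping from gestures to navigation actions (the actions are assumptions).
function navigationAction(value: string): "next" | "previous" | "select" | "none" {
  const { gesture, direction } = parseGesture(value);
  if (gesture === "SWIPE" && direction === "LEFT") return "next";
  if (gesture === "SWIPE" && direction === "RIGHT") return "previous";
  if (gesture === "THUMB" && direction === "UP") return "select";
  return "none";
}
```

In practice the same decisions can often be made without any script, by binding behaviors or task triggers directly to the gesture property as described above.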

Position Tracking

Exposes the following readonly properties, one set for hand ID1 and another for hand ID2 (# stands for the hand ID):

- hand#X, a number. Horizontal position, 0-100% of the camera's field of view, normalized to 0-1.

- hand#Y, a number. Vertical position, 0-100% of the camera's field of view, normalized to 0-1.

- hand#Angle, a number. 0-360 degrees, normalized to 0-1.

- hand#Size, a number. Hand size, 0-100% of the camera's field of view, normalized to 0-1.

- hand#Show, a Boolean. Is true while the hand is being tracked.

- hand#IsFront, a Boolean. Is true if the front of the palm is facing the camera.

- hand#IsRight, a Boolean. Is true if the hand is a right hand.

- hand#Inverted, a Boolean. Derived from the combination of IsFront and IsRight. Can be used, e.g., to determine whether a hand icon should be inverted or not.
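Because the position and angle values are normalized to 0-1, they need to be scaled before they can be used as pixel positions or degrees. The sketch below shows that arithmetic. The `HandSample` type and the `toScreen` function are hypothetical names for this example, and it assumes that 0,0 corresponds to the top left corner of the target area, which should be verified against the actual sensor output.

```typescript
// Hypothetical types for illustration; the field names mirror hand#X, hand#Y and hand#Angle.
interface HandSample {
  x: number;     // normalized 0-1
  y: number;     // normalized 0-1
  angle: number; // normalized 0-1, representing 0-360 degrees
}

interface HandOnScreen {
  left: number;        // pixels from the left edge
  top: number;         // pixels from the top edge (assumes y = 0 is the top)
  rotationDeg: number; // degrees
}

// Keep a value inside the 0-1 range in case of out-of-range input.
function clamp01(v: number): number {
  return Math.min(1, Math.max(0, v));
}

// Scale normalized values to a target area given in pixels.
function toScreen(hand: HandSample, widthPx: number, heightPx: number): HandOnScreen {
  return {
    left: clamp01(hand.x) * widthPx,
    top: clamp01(hand.y) * heightPx,
    rotationDeg: clamp01(hand.angle) * 360,
  };
}
```

For example, a hand at x = 0.5, y = 0.25 with angle = 0.5 on a 1920 x 1080 area ends up at 960 pixels from the left and 270 pixels from the top, rotated 180 degrees.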

General comments

Both gesture tracking and Activation Zone triggering work very well. Position tracking also works, but the data does not update quite fast enough: the shortest reporting interval the controller is capable of is 250 ms, which is not enough for a nice user experience. This is where it can make sense to combine a captured video stream from the device with the driver's properties to create a good user experience.
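To put the 250 ms interval in perspective, the following sketch works out how many position reports arrive per second and how many display frames pass between two reports. The 60 frames per second display rate is an assumption chosen for the example, and the function names are hypothetical.

```typescript
// Number of position reports per second for a given reporting interval.
function reportsPerSecond(reportIntervalMs: number): number {
  return 1000 / reportIntervalMs;
}

// Number of display frames rendered between two consecutive reports.
function framesPerReport(reportIntervalMs: number, displayFps: number): number {
  return (reportIntervalMs / 1000) * displayFps;
}

const intervalMs = 250; // fastest reporting interval mentioned above
const assumedFps = 60;  // assumption: a typical display refresh rate

console.log(reportsPerSecond(intervalMs));            // 4 reports per second
console.log(framesPerReport(intervalMs, assumedFps)); // 15 frames per report
```

Under these assumptions the hand position changes only on every 15th frame, which is why a hand icon driven purely by the position properties appears to move in steps.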

Use a captured video stream

The device can store a custom hand icon and a custom background (see the vendor's manual). If we use a PNG icon for the hand with, e.g., a white outline and a black background, or vice versa, we can use the blend mode settings in Blocks to make the background of the captured video transparent, so that it sits as a layer in front of the Blocks content. With this method we get a more seamless hand tracking experience, even though it is less flexible, since the icon and background are largely fixed once uploaded to the sensor.
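The reason this works can be seen from the per-channel arithmetic of two common blend modes. The sketch below uses the standard "screen" and "multiply" formulas on color values in the 0-1 range. Which blend modes are actually available and best suited in Blocks is not stated in this note, so treat the choice of these two modes as an assumption.

```typescript
// Standard "screen" blend for one color channel (values in 0-1).
// A black video pixel (0) leaves the content unchanged; a white one (1) gives white.
function screenBlend(content: number, video: number): number {
  return 1 - (1 - content) * (1 - video);
}

// Standard "multiply" blend for one color channel (values in 0-1).
// A white video pixel (1) leaves the content unchanged; a black one (0) gives black.
function multiplyBlend(content: number, video: number): number {
  return content * video;
}

// White icon on a black background combined with screen:
console.log(screenBlend(0.25, 0)); // background pixel: content shows through
console.log(screenBlend(0.25, 1)); // icon pixel: white

// Black icon on a white background combined with multiply:
console.log(multiplyBlend(0.25, 1)); // background pixel: content shows through
console.log(multiplyBlend(0.25, 0)); // icon pixel: black
```

So a white-on-black video layer pairs with a screen-type mode, and a black-on-white layer pairs with a multiply-type mode.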